Bob Green is a Professor of Forensic Science at the University of Kent in the UK, noted for his contributions to forensic science. Awarded an OBE in 2008 for services to forensic science, he is also a Senior Fellow of the Higher Education Academy. As a committed educator in the university's forensic science programme, he shares his expertise with students. As a Fellow and Vice President of the Chartered Society of Forensic Sciences, he is dedicated to supporting the profession. His passion lies in ensuring the highest quality of education and guidance in forensic science.

Over the years, I've come to realise that diversity in education and student engagement are closely related. This is one of several ideas on engagement that grew out of my work to achieve Diversity Mark accreditation for the modules I teach.

Module evaluation is, of course, an important part of continuing quality improvement in higher education (Setiawan & Aman, 2022). However, module evaluations are commonly circulated at the end of the term, the module or the academic year. The feedback obtained from students is then analysed and passed back to the lecturer or Module Convener. It strikes me that these end-of-module or end-of-year evaluations may have several limitations when it comes to driving constant improvement. First, it's not uncommon for students to be showered with evaluation requests from various modules, especially as end-of-year evaluations can coincide with other assessment deadlines. In my experience, some students may not have the time or the willingness to write much or to offer constructive views on their experience. As a result, response rates for end-of-year module evaluations are often low and not entirely representative of the cohort. Low participation in student evaluation can also make it harder to influence change and improve educational outcomes. Without comprehensive feedback from students, it is harder to understand the issues that need to be addressed and to identify areas for improvement (Zhao et al., 2022). Low response rates can also call into question the validity of the data and how well they represent the student voice. Accordingly, the comments and feedback from end-of-year module evaluations may be of limited use in promoting concurrent change. Negative comments about teaching styles or course structure, for example, can no longer be acted upon for that cohort, because the module has already been taught and the teacher-student interaction those comments relate to has ended. Such feedback becomes useful only later, when the module is periodically reviewed.

It is, of course, accepted that data from end-of-year module evaluations can be compared across years to see whether there has been any significant change or improvement in the quality of the course over time. Concurrent module evaluation, by contrast, allows changes to be made, based on the feedback obtained, while the module is still running. This lets us review and learn from the 'student voice' straight away. Used alongside the end-of-term evaluation, it allows changes and improvements to module content to draw on feedback from both (a) current and (b) previous students. The literature points to an emerging view that favours formative evaluation through real-time techniques, in which feedback is sought throughout the module (Dawson et al., 2021).

Inclusive learning – feedback.

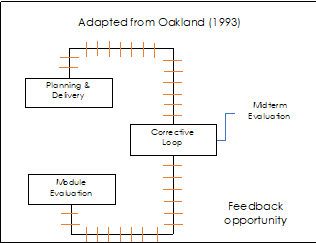

This approach represents a significant departure from more traditional methods, and it is not put forward as a 'one size fits all' option. Nevertheless, I have found that it offers some significant advantages: learning and teaching issues can be pinpointed and addressed quickly as they arise, rather than waiting until the end of the academic year. It also provides more 'intervention points', as summarised in the illustration above. This more immediate feedback loop allows problems, such as confusing module components, to be resolved more quickly. In my view, this quickness of pace is key, and it often supports the year-end assessments. Moreover, in my experience, continuous feedback and reflection cycles encourage a culture of continuous quality improvement.

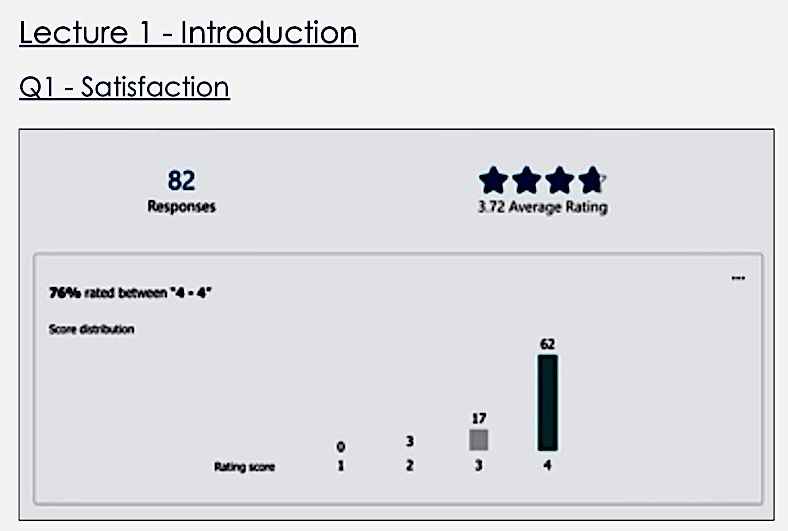

Above all, prioritising the student voice in real-time evaluation shows students that their opinions matter and lets them see the impact of their feedback almost immediately. In my experience, these benefits ultimately enhance both the student learning journey and teaching quality. The focus of concurrent evaluation is on improving the current cohort's learning experience by addressing issues swiftly while modules are running. While reflective evaluations remain valuable, real-time evaluation can help drive the evolution of a module towards the best possible student experience. Integrating concurrent and reflective (end-of-module) feedback as joint drivers of module development therefore seems to make sense, because it supports quality enhancement for both current and future students. The illustration above is taken from my real-time feedback 'dashboard'. Together with further responses, it lets me gauge how things are going week on week.

The Process

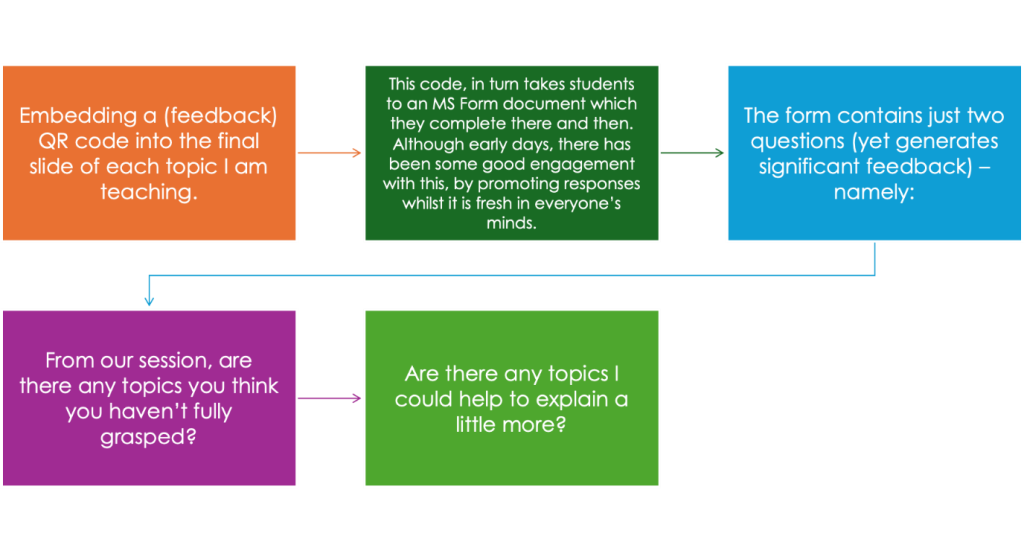

These simple changes operationalise the way the student voice is gathered. In short, they enable (a) more frequent inspection, (b) closer, topic-by-topic analysis and (c) correction, when necessary and possible. The illustration above summarises how topic-by-topic feedback creates opportunities to make concurrent changes to module content that affect current students, while involving students in regular feedback and letting them see how any changes are delivered. When a change cannot be accommodated, the reason is explained. These changes embed the 'student voice' into module content week by week, making it clear that students' "…opinion is valued". This approach enables me to gather feedback more regularly and to provide additional support.

The approach is already generating a good degree of concurrent follow-up and interaction, helping to identify topics that students would like explained in more depth, differently or more clearly. The simple process, summarised in the five points below, essentially uses QR codes that link to the feedback form (MS Forms), which students complete anonymously and at the time. Where students have asked questions or requested clarification, the anonymous feedback, quickly generated by a 'cut and paste' from the MS Form, is fed back to the class. Both in practice and in the literature (Yu and Liu, 2009), the ability to ask questions anonymously appears to ease embarrassment or unease and to encourage more detailed responses, creating what is sometimes termed a psychologically safe learning environment. The information and feedback are shared week by week via our online teaching platform, Moodle, showing students that their feedback is being acted upon and that the 'student voice' is being heard.

There are signs, then, that the idea has some merit. For example, this method attracts around 150 interactions over the 26 hours of course content. In comparison, the end-of-module survey often attracts responses in single figures. Furthermore, reflective end-of-module feedback offers few opportunities for improvement or change that benefit students currently studying.

Conclusion

These ideas are not 'one size fits all', and perhaps not to everyone's taste, but this is one example of how implementing Diversity Mark led to wider improvements. I hope to share more of these with you in due course. To conclude, this short article suggests that combining concurrent and reflective module evaluation provides a more holistic approach to continuing quality improvement in some HE settings. It shows how the student voice can drive concurrent improvements while also providing a safe learning environment in which students can ask questions. Above all, these are a few ideas aimed at getting the most out of implementing Diversity Mark and the quality improvements it undoubtedly promotes.

References:

- Dawson, P., Carless, D. and Lee, P.P.W., 2021. Authentic feedback: supporting learners to engage in disciplinary feedback practices. Assessment & Evaluation in Higher Education, 46(2), pp.286-296.

- Karam, M., Fares, H. and Al-Majeed, S., 2021. Quality assurance framework for the design and delivery of virtual, real-time courses.

- Setiawan, R. and Aman, A., 2022. The evaluation of the history education curriculum in higher education. Paramita: Historical Studies Journal, 32(2), pp.263-275.

- Trabelsi, Z., Alnajjar, F., Parambil, M.M.A., Gochoo, M. and Ali, L., 2023. Real-time attention monitoring system for classroom: A deep learning approach for student’s behaviour recognition. Big Data and Cognitive Computing, 7(1), p.48.

- Yu, F. and Liu, Y., 2009. Creating a psychologically safe online space for a student-generated questions learning activity via different identity revelation modes. British Journal of Educational Technology.

- Zhao, L., Xu, P., Chen, Y. and Shi, Y., 2022. A literature review of the research on students' evaluation of teaching in higher education. Frontiers in Psychology, 13.